
Ng Kian Wei

I am a full-time data scientist at the National University Health System (NUHS) in Singapore. Within NUHS, I manage the technical research and development portfolio for the NUHS Holomedicine Programme, focusing on augmented reality, applied computer vision and medical image analysis. I work closely with clinicians and other healthcare professionals, and many of our research outputs have transitioned, or are transitioning, to clinical validation and early-stage trials in preparation for wide-scale implementation and use within hospitals. On top of my day job as a data scientist, I am currently a PhD student at the National University of Singapore, under the supervision of A/Prof Khoo Eng Tat.


Much of my research lies at the intersection of augmented reality, applied computer vision and medical image analysis. For ease of browsing, the publications and projects below are grouped into a few main categories according to each project's primary focus or contribution.

Augmented Reality


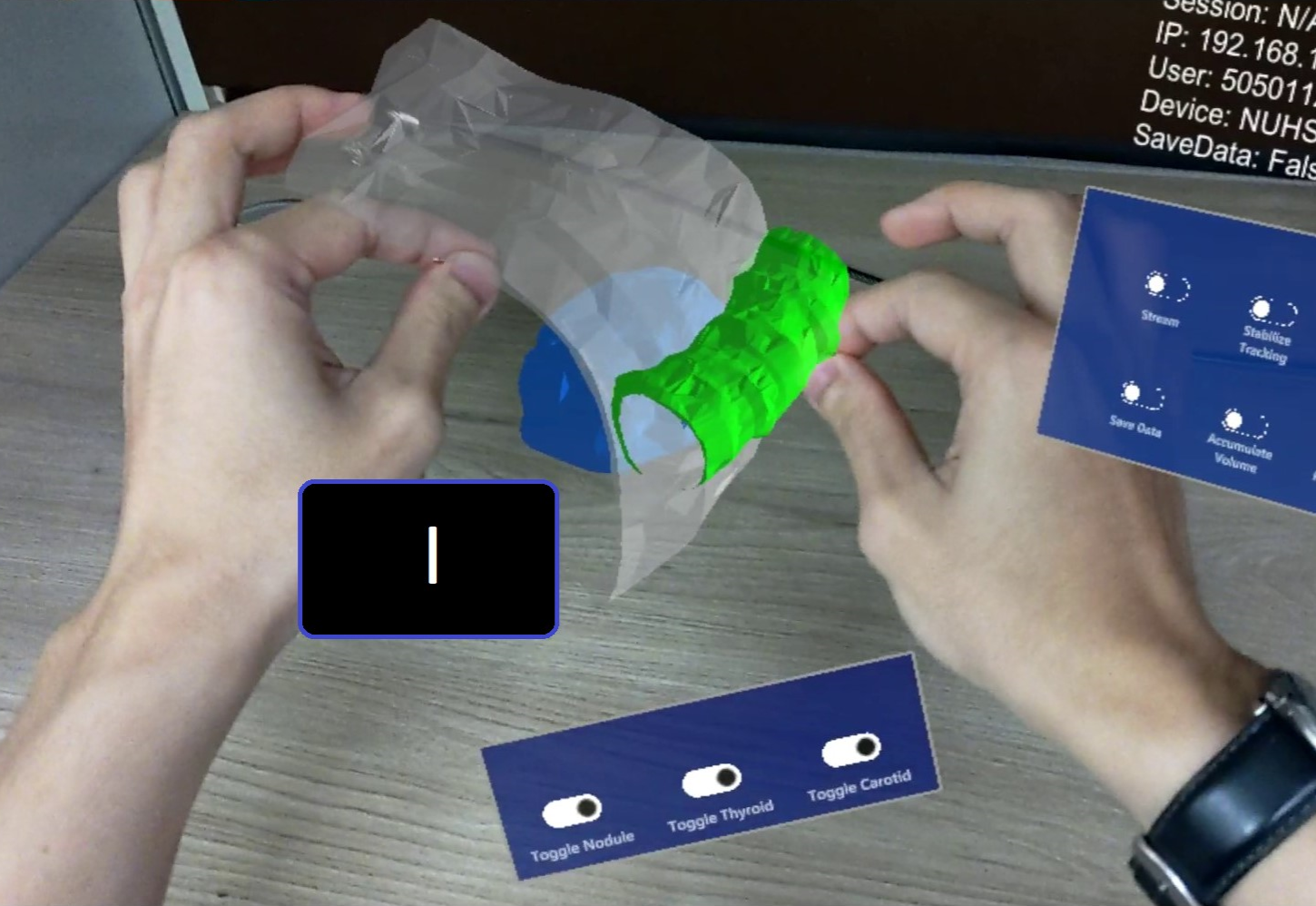

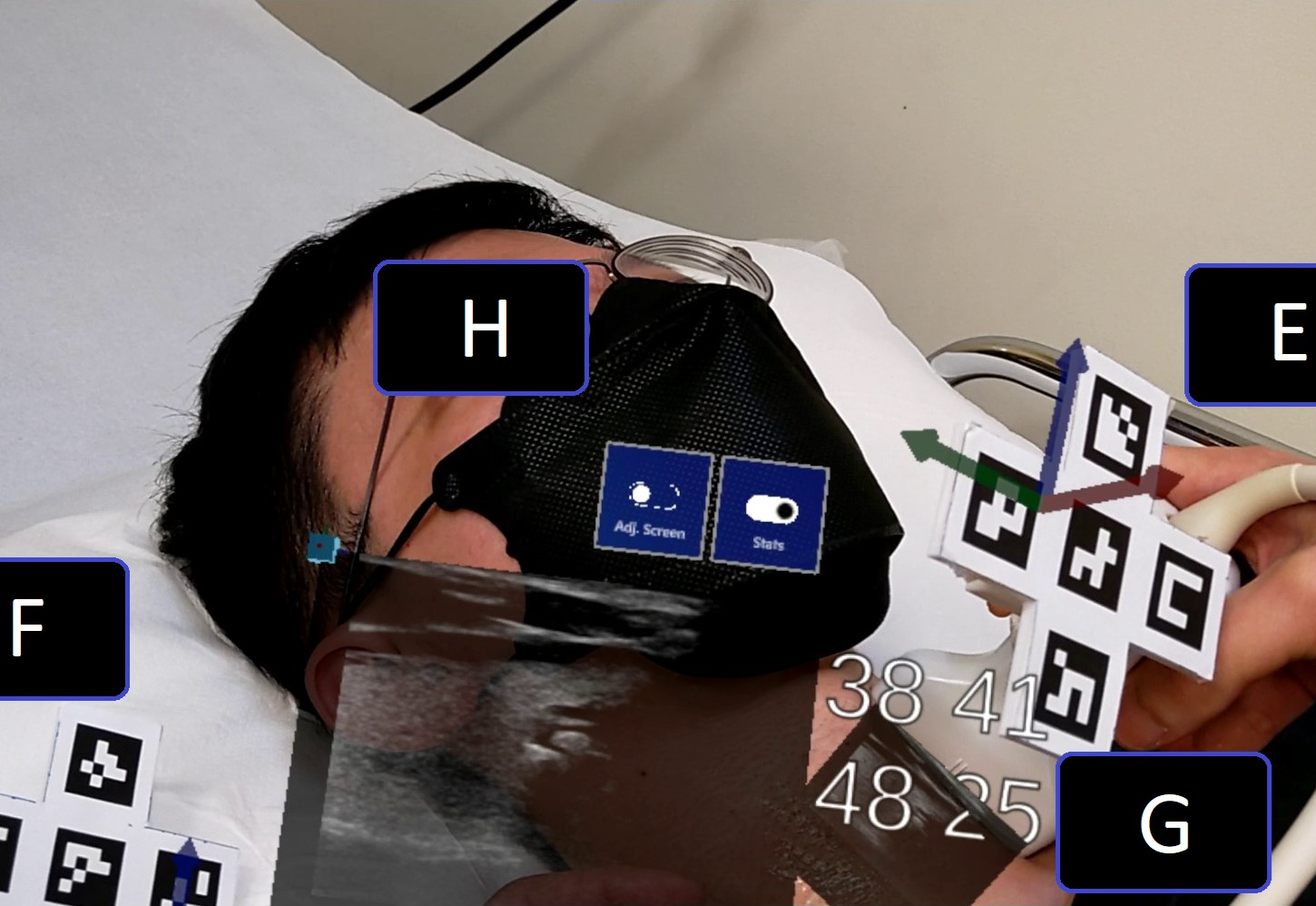

HoloPOCUS: Portable Mixed-Reality 3D Ultrasound Tracking, Reconstruction and Overlay

Kian Wei Ng, Yujia Gao, Mohammad Shaheryar Furqan, Zachery Yeo, Joel Lau, Kee Yuan Ngiam, Eng Tat Khoo

ASMUS, 2023

We improve on existing mixed-reality ultrasound (MR-US) solutions by developing a portable system that achieves high accuracy through stereo triangulation on denser ArUco chessboard keypoints. HoloPOCUS is also the first MR-US work to integrate 3D ultrasound reconstruction and projection using conventional probes.


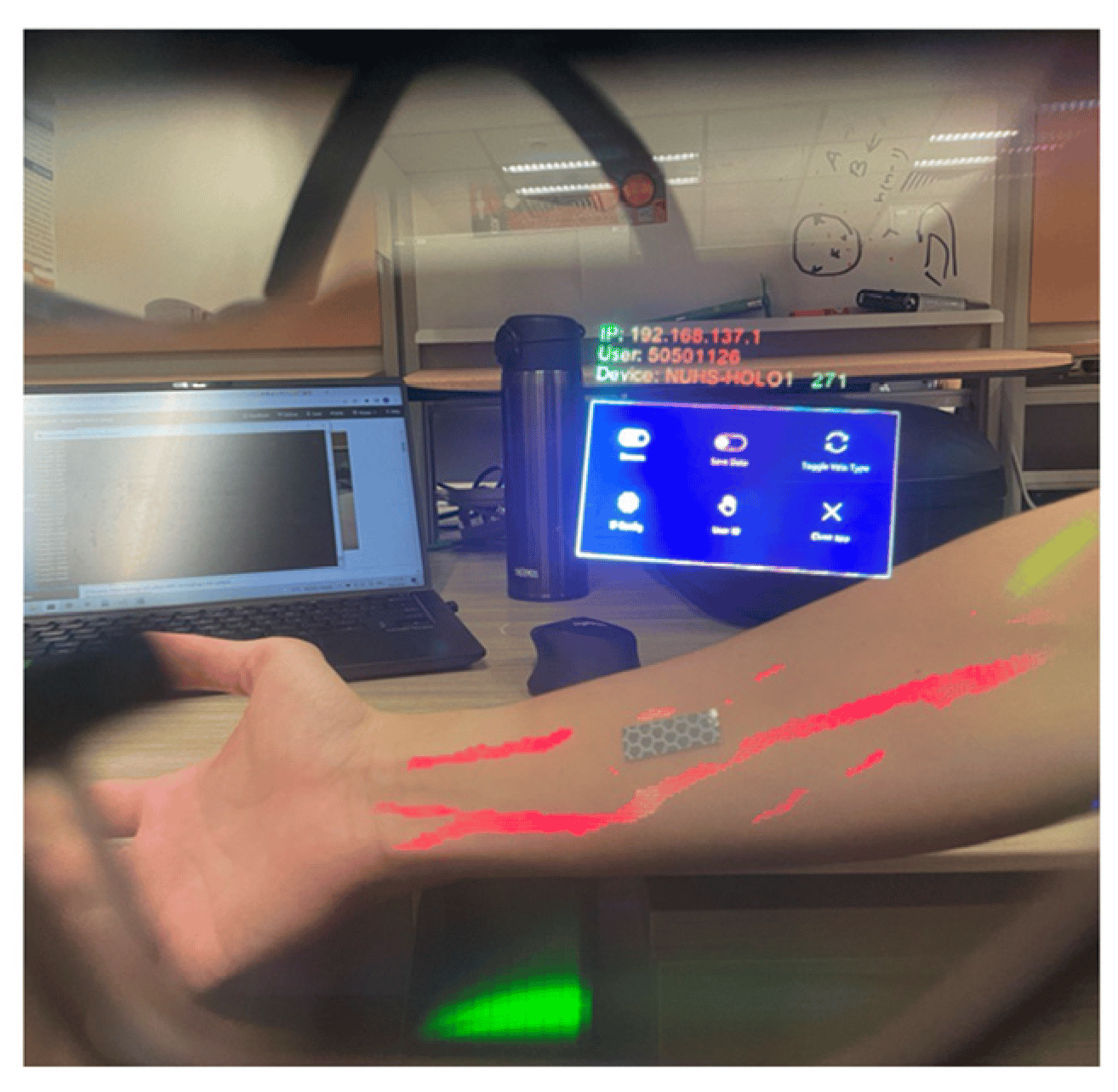

HoloVein — Mixed-Reality Venipuncture Aid via Convolutional Neural Networks and Semi-Supervised Learning

Kian Wei Ng, Mohammad Shaheryar Furqan, Yujia Gao, Kee Yuan Ngiam, Eng Tat Khoo

Electronics, 2023

We used the depth and infrared sensors on board the HoloLens 2 to develop an all-in-one mixed-reality vein-finding and projection system. Spatio-temporal consistency was exploited to generate semi-supervised labels, enabling data-efficient training of the vein segmentation model.

Deep Learning


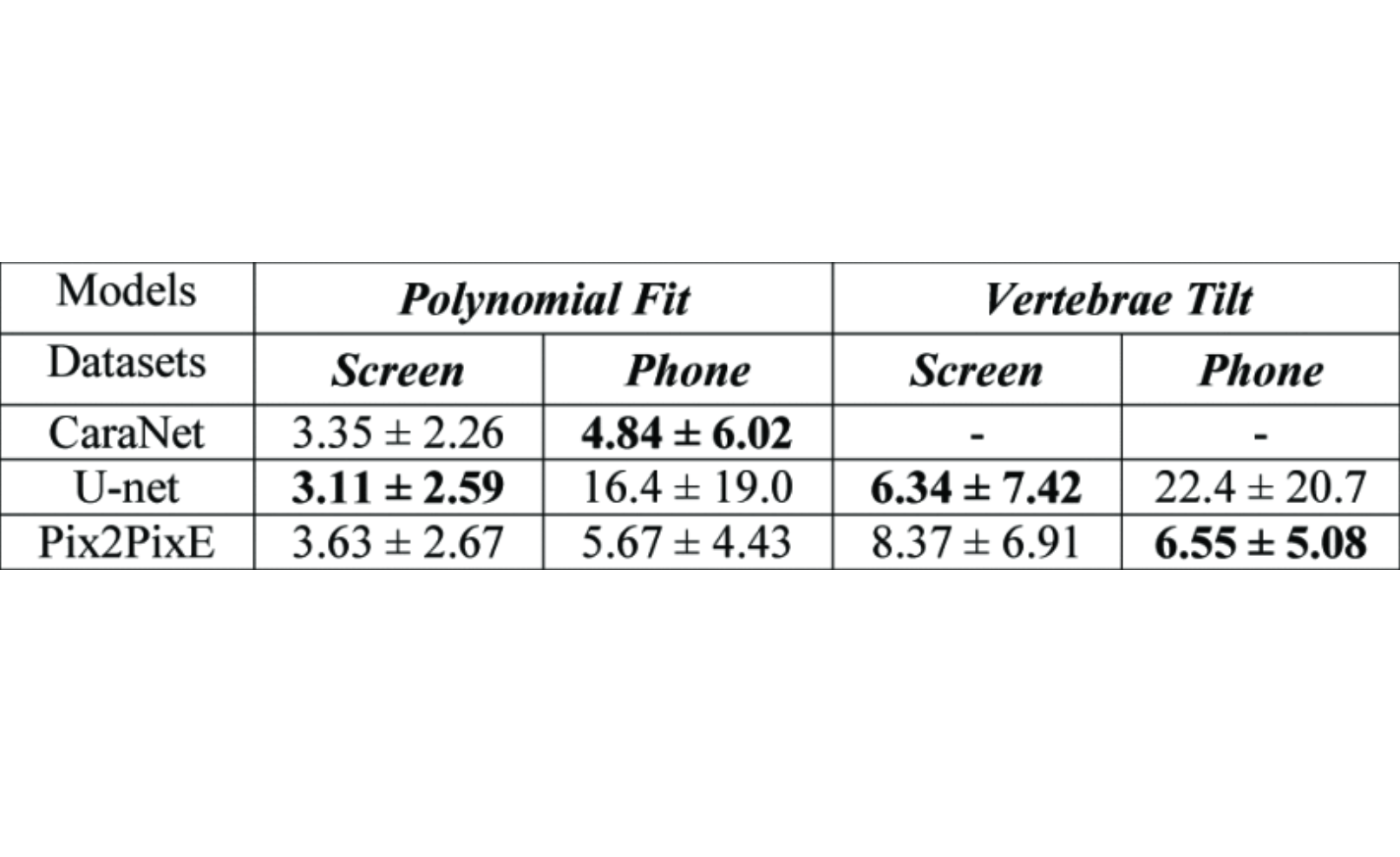

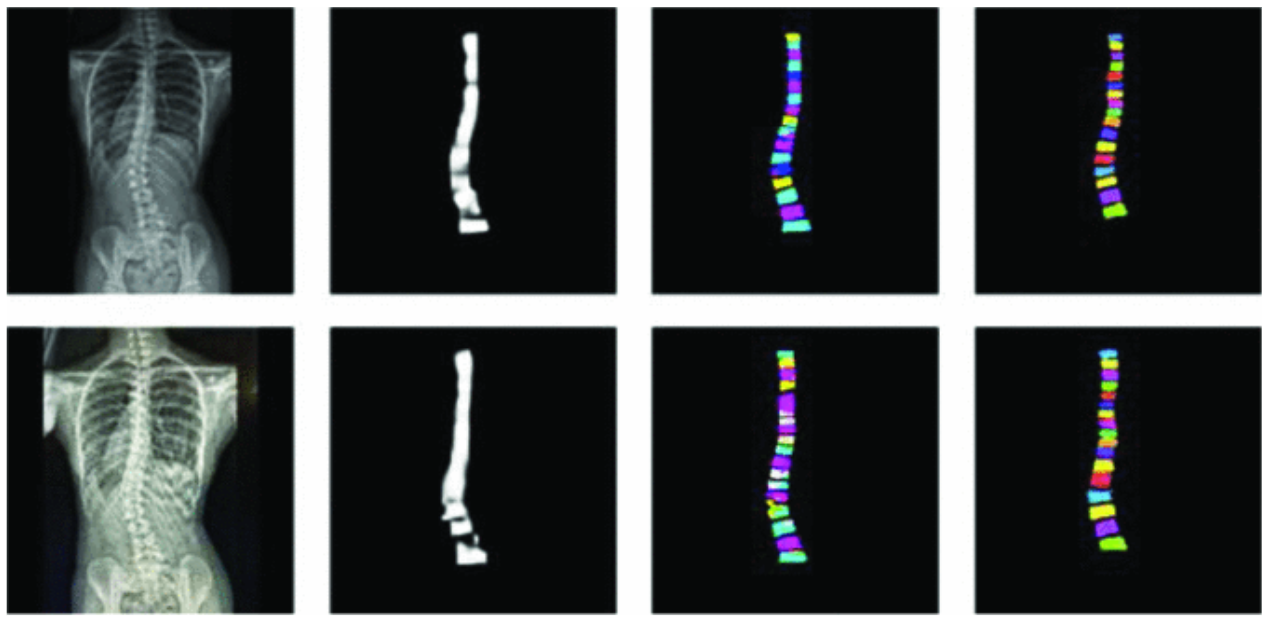

Vertebrae Segmentation with Generative Adversarial Networks for Automatic Cobb Angle Measurement

Ying Zhen Tan, Kian Wei Ng, Johnathan Tan, James Hallinan, Xi Zhen Low, Andrew Makmur, Kee Yuan Ngiam, Mohammad Shaheryar Furqan

EMBC, 2024

We evaluate the use of Generative Adversarial Networks (GANs) for vertebrae segmentation on X-ray images. In particular, we investigate whether GANs could outperform traditional models (e.g. UNet) on images corrupted by artifacts introduced when the X-ray images were re-photographed with phone cameras. As a feasibility study, this work paves the way towards a more user-friendly, phone-based Cobb angle estimation solution.

Others / Reviews


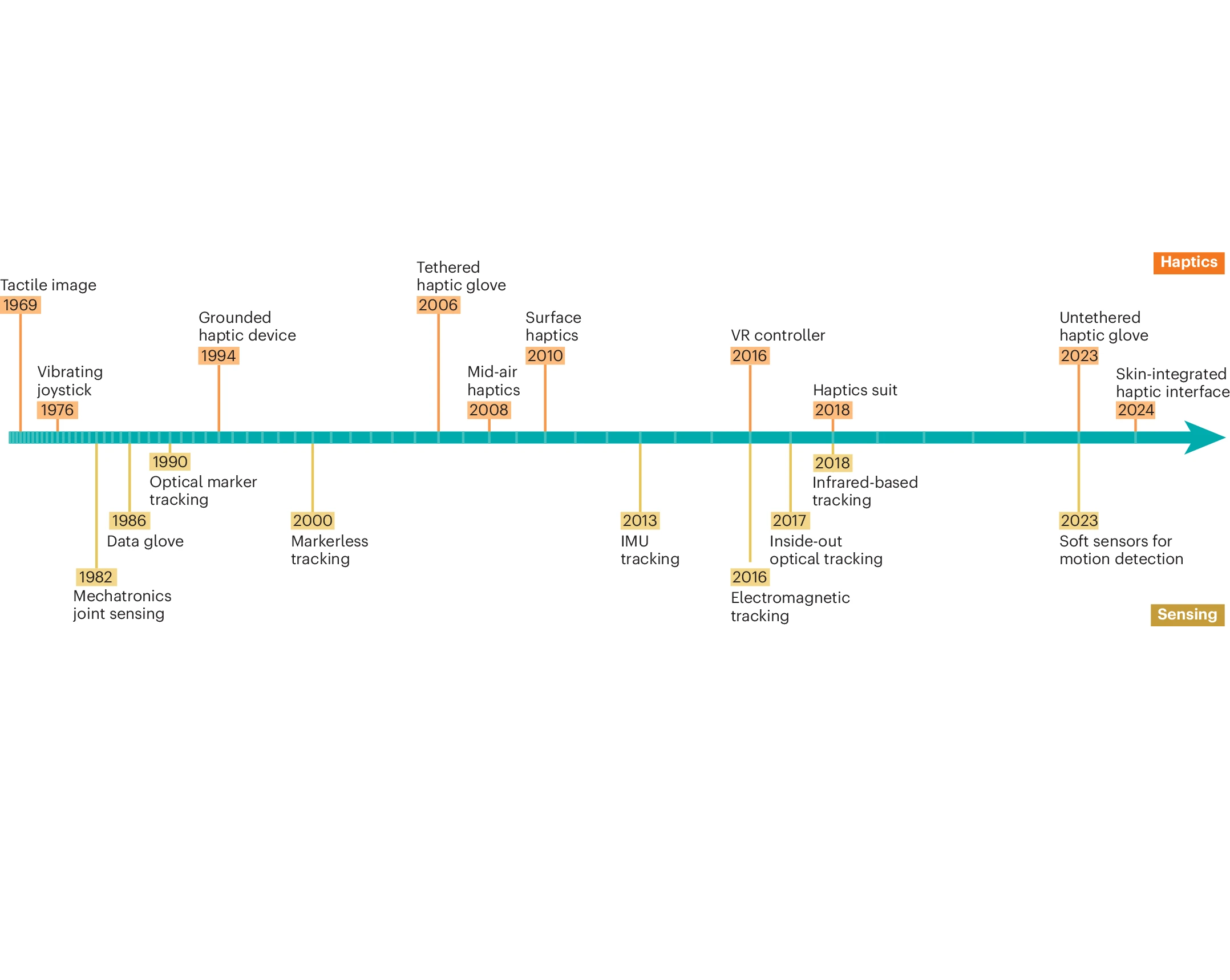

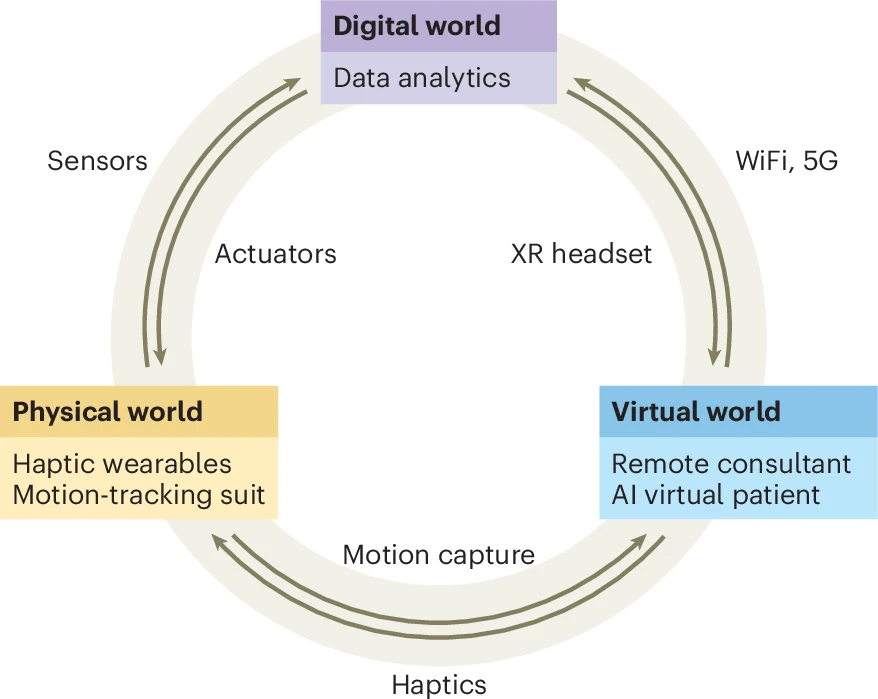

Bridging the digital–physical divide using haptic and wearable technologies

Jiaming Qi, Longteng Yu, Eng Tat Khoo, Kian Wei Ng, Yujia Gao, Alfred Wei Chieh Kow, Joo Chuan Yeo, Chwee Teck Lim

Nature Electronics, 2024

The metaverse could provide an immersive environment that integrates digital and physical realities. However, this will require appropriate haptic feedback and wearable technologies. Here we explore the development of haptic and wearable technologies that can be used to bridge the digital–physical divide and build a more realistic and immersive metaverse.


Website template courtesy of jonbarron.